Deep learning is a subset of machine learning that uses artificial neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience.

The Basic Architecture of Neural Networks

Perceptron: single-layer neural network

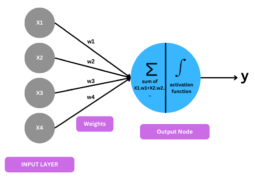

Neural networks differ in how many layers they have. The simplest one is the perceptron, which has only one computational layer: a single input layer feeds directly into one output node.

Each training instance has the form (X, y), where X = [x1, …, xd] contains d feature variables and y ∈ {−1, +1} is the class label.

Components of Perceptron

- Input Layer: The input layer consists of the feature values that we provide to the model (for example, if we want the model to make a prediction from a person's height, then height is the input).

- Weights: Each input is assigned a weight at the start. The weights are then adjusted during training.

- Bias: The bias unit helps the network make predictions by shifting the final output, even when all input values are 0. The bias is added to the weighted sum of the inputs.

- Activation Function: The activation function determines the output of the perceptron based on the weighted sum of the inputs and the bias term. Common activation functions include the step function, the sigmoid function, and the ReLU function.

- Output: The output of the perceptron is a single binary value, either 0 or 1 (or −1 and +1, depending on the label convention).

- Training Algorithm: The perceptron is trained with a supervised learning algorithm such as the perceptron learning algorithm. With a differentiable activation function, it can also be trained by gradient descent using backpropagation.
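The components above can be put together in a few lines. The following is a minimal sketch, assuming a step activation, 0/1 labels, and the classic perceptron learning rule. The function names (`step`, `predict`, `train_perceptron`), the learning rate, and the toy AND dataset are illustrative choices and are not taken from the text above.

```python
def step(z):
    """Step activation: 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def predict(weights, bias, x):
    """Weighted sum of the inputs plus the bias, passed through the step function."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return step(z)


def train_perceptron(samples, labels, lr=1.0, epochs=10):
    """Perceptron learning rule: shift each weight by lr * error * input."""
    weights = [0.0] * len(samples[0])  # one weight per input feature
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy data (illustrative assumption): the logical AND of two binary inputs.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [0, 0, 0, 1]

weights, bias = train_perceptron(X, y)
predictions = [predict(weights, bias, x) for x in X]
print(predictions)  # [0, 0, 0, 1]
```

AND is linearly separable, so the learning rule settles on a separating line after a few passes over the data. A single perceptron cannot do the same for a problem like XOR, which is one motivation for adding hidden layers.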

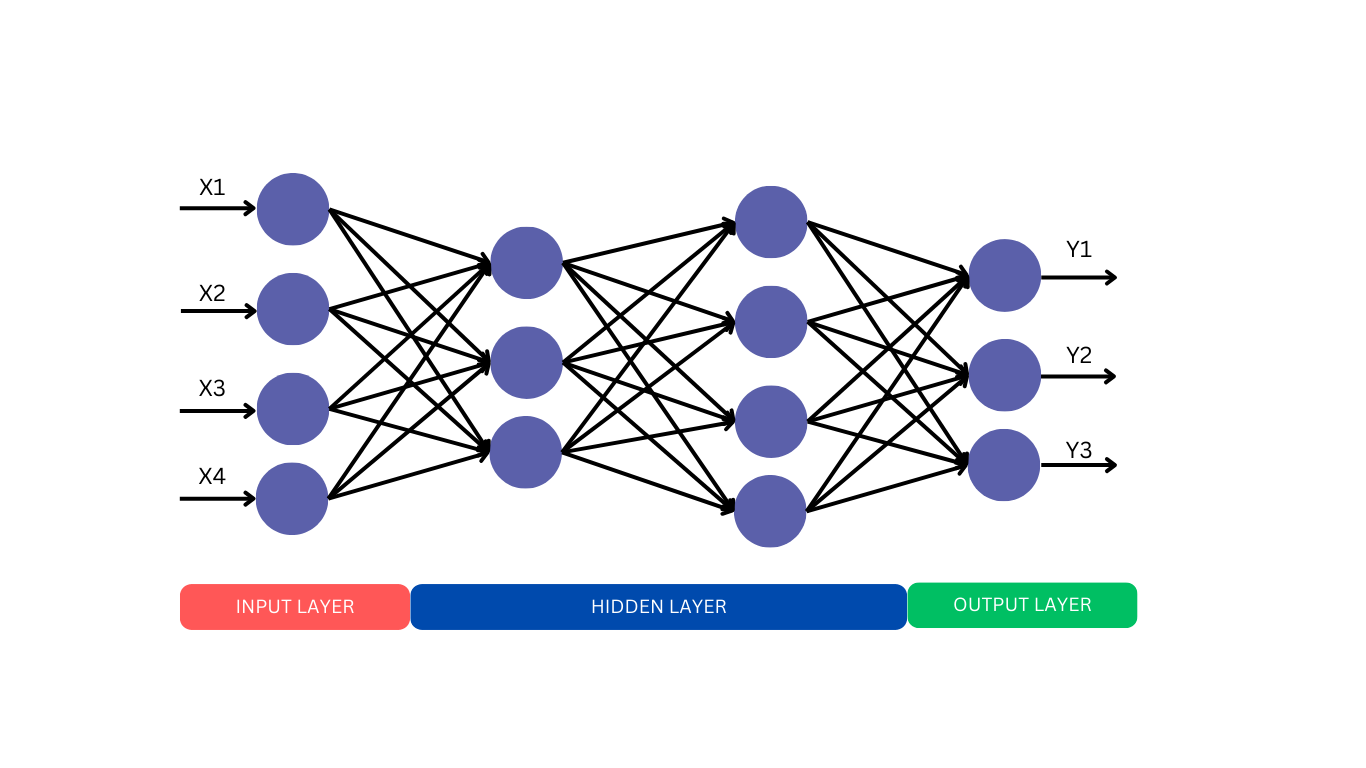

Multilayered Neural Network

In the multilayered neural network above, there are 4 inputs and 3 units in the first hidden layer, so 4×3 = 12 weights are initialized between them. Similarly, the second hidden layer has 4 units, so another 3×4 = 12 weights are initialized between the first and second hidden layers.
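The weight counts can be checked with a short calculation. This is a sketch under the assumption that every unit in one layer connects to every unit in the next (a fully connected network). The bias count, one per hidden unit, is an added assumption, since the text only counts weights.

```python
# Layer sizes from the text: 4 inputs, 3 units in the first hidden layer,
# 4 units in the second hidden layer.
layer_sizes = [4, 3, 4]

# In a fully connected network, each pair of adjacent layers contributes
# (units in) * (units out) weights.
weights_per_layer = [
    n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]
total_weights = sum(weights_per_layer)

# Assumption: one bias per non-input unit.
total_biases = sum(layer_sizes[1:])

print(weights_per_layer)             # [12, 12]
print(total_weights)                 # 24
print(total_weights + total_biases)  # 31
```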

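The calculations within a layer are independent of one another and can be done simultaneously, which is why a good GPU helps: it runs many of these calculations in parallel. The weights (together with the biases) are known as the parameters of the network, and their total count is a common measure of model size. GPT-3, for example, has 175 billion parameters.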
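To see why these calculations can run simultaneously, it may help to write out a forward pass. The sketch below assumes a fully connected network with layer sizes 4, 3, and 4, a ReLU activation, and random initial weights. The helper names (`dense`, `init_layer`) and the input values are hypothetical. It runs on the CPU in plain Python; the point is only that each unit's weighted sum uses the same inputs and does not depend on any other unit in its layer.

```python
import random


def init_layer(n_in, n_out, rng):
    """Create n_out rows of n_in random weights, plus one zero bias per unit."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(inputs, weights, biases):
    """One fully connected layer with ReLU.

    Each output unit is its own weighted sum over the same inputs, so the
    units could be computed in any order or all at once.
    """
    return [
        max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


rng = random.Random(0)   # fixed seed so the run is repeatable
layer_sizes = [4, 3, 4]  # inputs, first hidden layer, second hidden layer
layers = [
    init_layer(n_in, n_out, rng)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]

activations = [0.5, -1.0, 2.0, 0.0]  # illustrative input with 4 features
for weights, biases in layers:
    activations = dense(activations, weights, biases)

print(len(activations))  # 4, one value per unit in the second hidden layer
```

Numerical libraries express each layer as a single matrix multiplication, which is the kind of operation a GPU parallelizes well.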
